Joint Estimation of Deformation and Shading for Dynamic Texture Overlay

We developed a dynamic texture overlay method for augmenting non-rigid surfaces in single-view video. We are particularly interested in retexturing surfaces whose deformations are difficult to describe, such as moving cloth. Our approach to augmenting a piece of cloth in a real video sequence is completely image-based and does not require any 3-dimensional reconstruction of the cloth surface, because we are interested in convincing visualization rather than accurate reconstruction. We blend the virtual texture into the real video such that its deformation in the image projection, as well as the lighting conditions and shading in the final image, remain the same. For this purpose, we need to recover geometric parameters that describe the deformation of the projected surface in the image plane. Without a 3-dimensional reconstruction of the surface, we cannot explicitly model the lighting conditions of the scene. However, we can recover the impact of the illumination on the intensity of a scene point. We therefore model shading and changes in the lighting conditions with additional photometric parameters that describe intensity changes in the image. Recovering the geometric and photometric parameters for realistic retexturing then becomes an image registration task: we solve for a warp that registers two images not only spatially but also photometrically. We formulate a cost function based on an extended optical flow constraint that allows for brightness changes as well as geometric changes in the image. From the photometric parameters, a shading map is estimated and applied to the new texture, which is warped with the geometric parameters. The synthetic texture is then blended into the original video via alpha blending at the mesh borders.
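
As a rough illustration of an optical flow constraint extended by a brightness term, the following Python sketch fits a translation and a brightness gain on a single image patch. The multiplicative brightness model, the assumption of constant motion over the patch, and the names `estimate_patch_motion_and_gain` and `synthetic_pair` are choices made for this example only; they are not taken from the method described above, which estimates a full deformation warp and its own photometric parameters.

```python
import numpy as np

def estimate_patch_motion_and_gain(i0, i1):
    """Least-squares fit of a translation (u, v) and a gain change m on one patch.

    Assumed model (illustrative only): I1(x + u, y + v) = (1 + m) * I0(x, y).
    Linearising I1 around (x, y) gives, per pixel,
        I1x * u + I1y * v - I0 * m = I0 - I1.
    """
    i0 = np.asarray(i0, dtype=np.float64)
    i1 = np.asarray(i1, dtype=np.float64)
    gy, gx = np.gradient(i1)  # derivatives along rows (y) and columns (x)
    inner = (slice(1, -1), slice(1, -1))  # skip borders (one-sided differences)
    a = np.stack(
        [gx[inner].ravel(), gy[inner].ravel(), -i0[inner].ravel()], axis=1
    )
    b = (i0 - i1)[inner].ravel()
    (u, v, m), *_ = np.linalg.lstsq(a, b, rcond=None)
    return u, v, m

def synthetic_pair(u=0.3, v=-0.2, m=0.1, size=64):
    """Smooth test image and a shifted, brightened copy that obeys the model."""
    y, x = np.mgrid[0:size, 0:size].astype(np.float64)

    def f(xx, yy):
        return np.sin(0.2 * xx) + np.cos(0.15 * yy) + 2.0

    return f(x, y), (1.0 + m) * f(x - u, y - v)

i0, i1 = synthetic_pair()
u, v, m = estimate_patch_motion_and_gain(i0, i1)
print(f"u={u:.3f}, v={v:.3f}, m={m:.3f}")
```

Because the constraint is linearised, a sketch like this is only meaningful for small displacements; larger motion would typically need an iterative or coarse-to-fine scheme.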

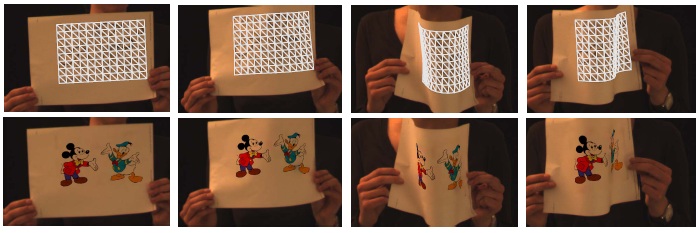

Tracking and augmenting a deforming piece of paper under self-occlusion and illumination changes. The new texture is deformed and illuminated with the recovered shading map.

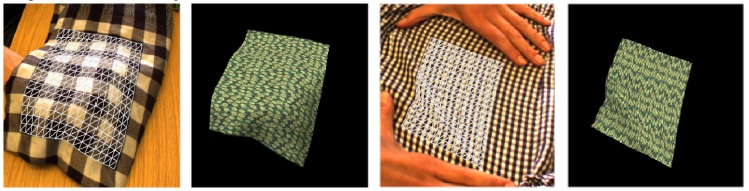

Tracking and retexturing cloth with different characteristics. Note that the mesh is purely 2-dimensional and the 3-dimensional illusion comes from shading.

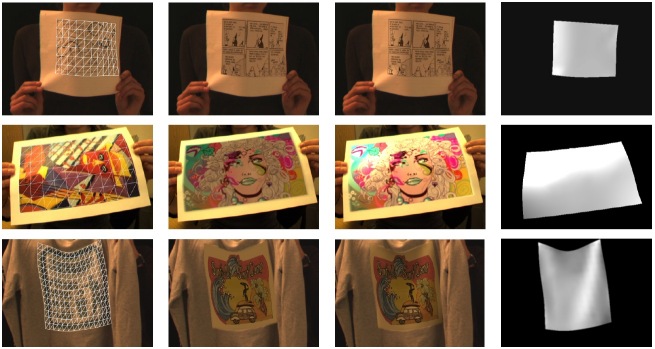

Original frame with overlaid mesh, retexturing without shading map, retexturing with shading map, and shading map.
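
The final compositing step (apply the shading map to the warped texture, then alpha-blend the result into the frame) might look like the following minimal Python sketch. It assumes a multiplicative per-pixel shading map, images stored as floating-point arrays in [0, 1], and an alpha mask that falls off towards the mesh borders; the function name `composite` is hypothetical.

```python
import numpy as np

def composite(frame, warped_texture, shading, alpha):
    """Shade an already-warped texture and alpha-blend it into a video frame.

    frame, warped_texture: (H, W, 3) float arrays in [0, 1]
    shading:               (H, W) multiplicative shading map (assumed model)
    alpha:                 (H, W) blend mask, 1 inside the mesh, 0 outside
    """
    shaded = np.clip(warped_texture * shading[..., None], 0.0, 1.0)
    a = alpha[..., None]
    return a * shaded + (1.0 - a) * frame

# Tiny 2x2 example: a grey frame and a brighter texture.
frame = np.full((2, 2, 3), 0.5)
texture = np.full((2, 2, 3), 0.8)
shading = np.array([[1.0, 0.5], [0.5, 1.0]])
alpha = np.array([[1.0, 0.0], [0.5, 1.0]])
result = composite(frame, texture, shading, alpha)
```

Where `alpha` is 0 the original frame shows through unchanged, and where it is 1 only the shaded texture is visible; intermediate values give the soft transition at the mesh borders.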

Handling External and Self-Occlusions

Objects occluding the real surface have to be taken into account: on the one hand they affect the parameter estimation, and on the other hand they should also occlude the virtually textured surface. We treat self-occlusions and external occlusions separately. External occlusions are treated as outliers and excluded from parameter estimation, as these regions should not contribute to the mesh deformation. In these regions the mesh is assumed to be smooth and the deformation is driven by the smoothing constraints. Self-occlusions, however, influence the mesh deformations.

Our approach to external occlusion handling is twofold. First, instead of a least-squares estimator we use a robust estimator in the optimization procedure, which detects occluded pixels and reweights them in the resulting error functional. Second, we establish a dense occlusion map specifying which texture points of the deforming surface are visible and which are occluded. This occlusion map is established from local statistical color models of texture surface patches and a global color model of the occluding object that is built during tracking.
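
To illustrate how a robust estimator can detect and down-weight outliers, here is a small Python sketch of iteratively reweighted least squares (IRLS) with Huber weights on a toy line-fitting problem. The choice of the Huber function, the fixed threshold `k`, and the name `irls_huber` are assumptions for this example; the text above does not specify which robust estimator is used.

```python
import numpy as np

def irls_huber(a, b, k=0.1, iterations=30):
    """Solve a @ params ~ b robustly with Huber weights (illustrative sketch).

    Residuals larger than k get weight k / |r| < 1, so gross outliers
    (for example occluded pixels) contribute little to the solution.
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    weights = np.ones(len(b))
    for _ in range(iterations):
        sw = np.sqrt(weights)
        params, *_ = np.linalg.lstsq(a * sw[:, None], b * sw, rcond=None)
        residuals = np.abs(a @ params - b)
        weights = np.minimum(1.0, k / np.maximum(residuals, 1e-12))
    return params, weights

# Toy data: a line y = 2x + 1 where every fifth sample is a gross outlier.
x = np.linspace(0.0, 1.0, 50)
design = np.stack([x, np.ones_like(x)], axis=1)
y = 2.0 * x + 1.0
y[2::5] += 10.0

params, weights = irls_huber(design, y)
outlier_mask = weights < 0.5  # low weight flags a likely outlier
```

The final weights double as a soft outlier indicator: samples that end up with a small weight are the ones the fit considers inconsistent with the model.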

Estimated occlusion maps.
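
One simple way to turn color models into an occlusion map is to compare, per pixel, the likelihood under a surface model with the likelihood under an occluder model. The Python sketch below does this with a single Gaussian per model. The Gaussian form, the decision rule, and the names `fit_gaussian`, `log_likelihood` and `occlusion_map` are assumptions for illustration; the statistical models actually used are not specified here.

```python
import numpy as np

def fit_gaussian(samples):
    """Fit a Gaussian color model (mean, covariance) to (N, 3) color samples."""
    samples = np.asarray(samples, dtype=np.float64)
    mean = samples.mean(axis=0)
    # Small diagonal term keeps the covariance invertible.
    cov = np.cov(samples, rowvar=False) + 1e-6 * np.eye(samples.shape[1])
    return mean, cov

def log_likelihood(pixels, mean, cov):
    """Per-pixel Gaussian log-likelihood for an (..., 3) color array."""
    diff = np.asarray(pixels, dtype=np.float64) - mean
    maha = np.einsum("...i,ij,...j->...", diff, np.linalg.inv(cov), diff)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (maha + logdet + diff.shape[-1] * np.log(2.0 * np.pi))

def occlusion_map(patch, surface_model, occluder_model):
    """True where the occluder model explains a pixel better than the surface."""
    return log_likelihood(patch, *occluder_model) > log_likelihood(
        patch, *surface_model
    )

rng = np.random.default_rng(0)
surface_color = np.array([0.8, 0.2, 0.2])
occluder_color = np.array([0.2, 0.6, 0.3])
surface_model = fit_gaussian(surface_color + 0.02 * rng.standard_normal((200, 3)))
occluder_model = fit_gaussian(occluder_color + 0.02 * rng.standard_normal((200, 3)))

patch = np.tile(surface_color, (4, 4, 1))
patch[:2, :2] = occluder_color  # an occluder covers the top-left corner
mask = occlusion_map(patch, surface_model, occluder_model)
```

In a setting like the one described above, the surface model would be fitted per local patch and the occluder model accumulated over time, rather than fitted once as in this toy example.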

Self-occlusions have to be handled in 2-dimensional deformation estimation because a 2-dimensional mesh naturally folds in the presence of self-occlusion. In our approach, the optical flow field is regularized with a 2-dimensional mesh-based deformation model. The formulation of the deformation model contains weighted smoothing constraints defined locally on topological vertex neighborhoods. We address the problem of self-occlusions by weighting the smoothness constraints locally according to the occlusion of a region. Thereby, the mesh is forced to shrink instead of fold in occluded regions. Occlusion estimates are established from shrinking regions in the deformation mesh.
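
The effect of locally weighted smoothness constraints can be seen in a 1-dimensional toy version of the problem. The Python sketch below fits vertex positions on a chain to noisy target positions, once with uniform weights and once with the data term switched off and the smoothness weight raised in an occluded region. The chain topology, the second-difference smoothness term, the specific weights and the name `fit_chain` are assumptions for this example, not the deformation model described above.

```python
import numpy as np

def fit_chain(targets, data_weights, smooth_weights):
    """Minimise sum_i d_i (x_i - t_i)^2 + sum_i s_i (x_{i-1} - 2 x_i + x_{i+1})^2."""
    targets = np.asarray(targets, dtype=np.float64)
    n = len(targets)
    rows, rhs = [], []
    for i in range(n):  # data term: pull each vertex towards its target
        row = np.zeros(n)
        row[i] = np.sqrt(data_weights[i])
        rows.append(row)
        rhs.append(np.sqrt(data_weights[i]) * targets[i])
    for i in range(1, n - 1):  # smoothness term on each interior vertex
        row = np.zeros(n)
        row[i - 1:i + 2] = np.sqrt(smooth_weights[i]) * np.array([1.0, -2.0, 1.0])
        rows.append(row)
        rhs.append(0.0)
    x, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return x

# Vertices 4..6 are self-occluded; their flow-based targets are unreliable
# and would fold the chain (positions are no longer increasing).
targets = np.array([0.0, 1.0, 2.0, 3.0, 5.0, 3.5, 4.5, 4.0, 5.0, 6.0, 7.0])
occluded = np.zeros(len(targets), dtype=bool)
occluded[4:7] = True

naive = fit_chain(targets, np.ones(11), np.full(11, 0.01))
aware = fit_chain(
    targets,
    np.where(occluded, 0.0, 1.0),    # ignore data in the occluded region
    np.where(occluded, 10.0, 0.01),  # and rely on smoothness there instead
)
print(np.diff(naive).min(), np.diff(aware).min())
```

With uniform weights the chain follows the unreliable targets and folds (some edge lengths become negative), whereas with occlusion-dependent weights the occluded edges stay positive but become shorter, which is the 1-dimensional analogue of shrinking instead of folding.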

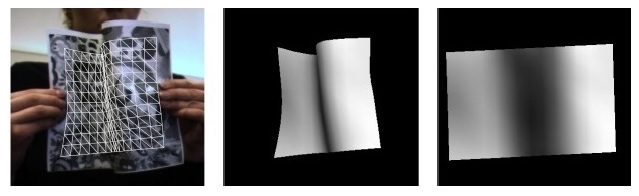

Original frame with overlaid mesh, occlusion maps on the deformed and undeformed surface.

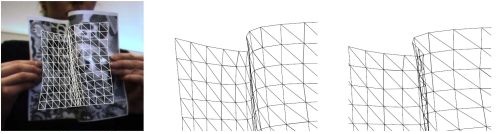

From left to right: original frame, detail of the mesh with and without self-occlusion handling.

Videos

Selected Publications

A. Hilsmann, D. Schneider, P. Eisert, Realistic Cloth Augmentation in Single View under Occlusions, Computers & Graphics, 2010.

A. Hilsmann, P. Eisert, Joint Estimation of Deformable Motion and Photometric Parameters in Single View Video, ICCV Workshop on Non-Rigid Shape Analysis and Deformable Image Alignment NORDIA 2009, Kyoto, Japan, Sept. 2009.

A. Hilsmann, P. Eisert, Tracking Deformable Surfaces with Optical Flow in the Presence of Self Occlusions in Monocular Image Sequences, CVPR Workshop on Non-Rigid Shape Analysis and Deformable Image Alignment NORDIA 2008, Anchorage, Alaska, June 2008.