Interactive Volumetric Video

Photo-realistic modeling, real-time rendering and animation of humans remain difficult, especially in virtual and augmented reality applications. Moreover, interaction with virtual humans plays an important role in an immersive experience. Classic computer graphics models are highly interactive but usually lack realism in real-time applications. Advances in volumetric studios make it possible to create high-quality 3D video content for free-viewpoint rendering on AR and VR glasses. This enables highly immersive viewing experiences, but these experiences are limited to pre-recorded situations. In this paper, we go beyond free-viewpoint volumetric video and present a new framework for creating interactive volumetric video content of humans, together with real-time rendering and streaming. Re-animating and altering an actor’s performance captured in a volumetric studio becomes possible through semantic enrichment of the captured data and new hybrid geometry- and video-based animation methods. These methods animate the high-quality captured data directly, instead of creating an animatable model that merely resembles it.

Our approach allows gaze correction, e.g., so that the virtual human’s gaze follows the user in a VR environment.
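As an illustration of the geometry involved in gaze correction, the sketch below computes the yaw and pitch a head would need to rotate by to face a user. It assumes a y-up coordinate system in which the neutral head looks along +z; the function name `gaze_angles` and the clamping limits are hypothetical and not taken from the project.

```python
import math
from typing import Sequence, Tuple


def gaze_angles(
    head_pos: Sequence[float],
    user_pos: Sequence[float],
    max_yaw_deg: float = 60.0,    # hypothetical comfort limit
    max_pitch_deg: float = 30.0,  # hypothetical comfort limit
) -> Tuple[float, float]:
    """Yaw and pitch (degrees) that turn a head at head_pos towards user_pos.

    Assumption: y-up frame, neutral head looking along +z.
    Positive yaw turns towards +x, positive pitch looks up.
    """
    dx = user_pos[0] - head_pos[0]
    dy = user_pos[1] - head_pos[1]
    dz = user_pos[2] - head_pos[2]
    yaw = math.degrees(math.atan2(dx, dz))
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    # Clamp so the head never turns further than the assumed limits.
    yaw = max(-max_yaw_deg, min(max_yaw_deg, yaw))
    pitch = max(-max_pitch_deg, min(max_pitch_deg, pitch))
    return yaw, pitch
```

The resulting angles could, for example, be applied to the head joint of a fitted body model, whose motion is then transferred to the captured mesh as described in the Hybrid Animation section.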

Overview

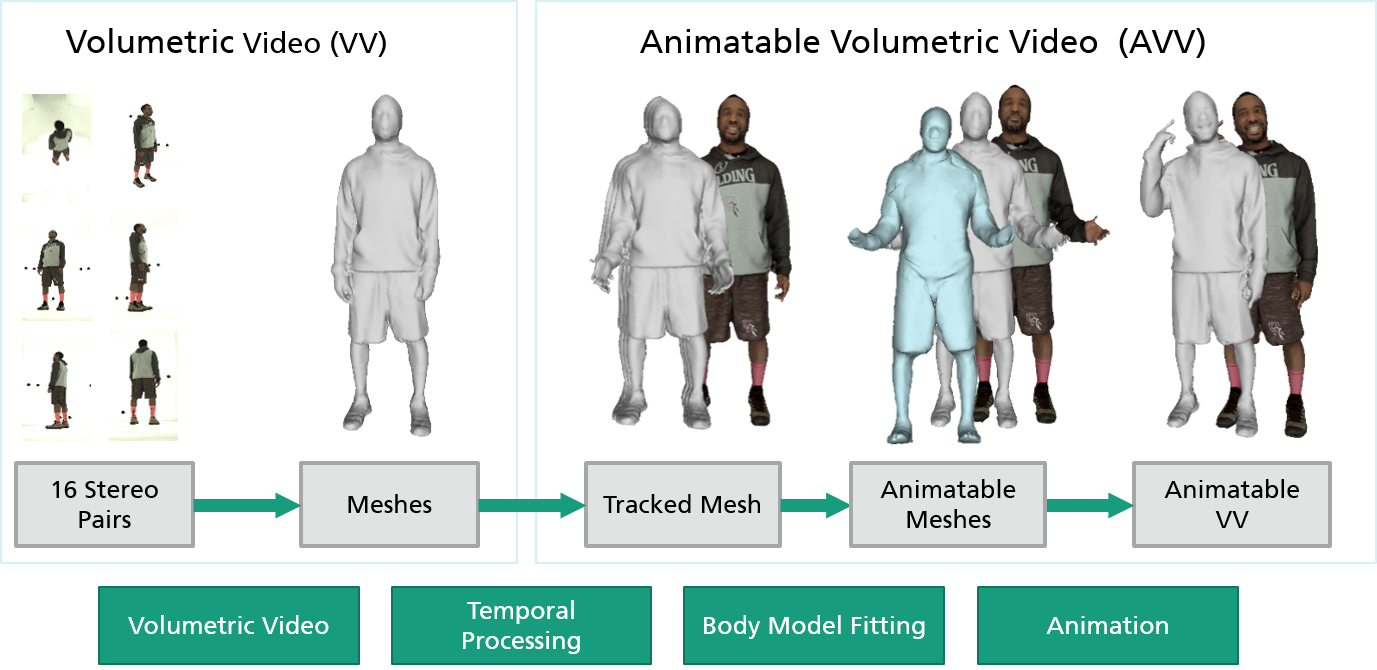

The pipeline starts with the creation of high-quality volumetric video content (free-viewpoint video) by capturing an actor’s performance in a volumetric studio and computing a temporal sequence of 3D meshes. The resulting sequence of temporally inconsistent meshes is converted into spatio-temporally coherent mesh (sub)sequences using template-based approaches, which facilitates texturing and compression. To allow for topological changes during the sequence, we use a key-frame-based method that decomposes the captured sequence into subsequences and registers a number of key-frames to the captured data. The final dynamic textured 3D reconstructions can then be inserted as volumetric video assets into virtual environments and viewed from arbitrary directions (free-viewpoint video). To make the captured data animatable, we learn a parametric rigged human model and fit it to the captured data. This process enriches the captured data with semantic pose and animation data taken from the parametric model, which can then be used to drive the animation of the captured volumetric video data itself.

End-to-end pipeline for the creation of animatable volumetric video content.
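The key-frame-based decomposition can be illustrated with a greedy sketch: a key-frame mesh is tracked forward until registration fails, and the failing frame starts a new subsequence. The callback `registration_error(key, frame)` and the threshold `max_error` are hypothetical placeholders for a real non-rigid registration; treating the first frame of each subsequence as its key-frame is a simplification, not the project's key-frame selection.

```python
from typing import Callable, List, Tuple


def split_into_subsequences(
    num_frames: int,
    registration_error: Callable[[int, int], float],
    max_error: float,
) -> List[Tuple[int, int, int]]:
    """Greedily split frames [0, num_frames) into temporally coherent subsequences.

    registration_error(k, f) is a hypothetical callback returning the residual
    after deforming key-frame k's mesh onto captured frame f.
    Returns (key_frame, first_frame, last_frame) triples.
    """
    segments: List[Tuple[int, int, int]] = []
    start = 0
    while start < num_frames:
        key = start  # simplification: first frame of the subsequence is its key-frame
        end = start
        # Extend the subsequence while the key-frame still registers well,
        # i.e. until a topological change (or tracking failure) is detected.
        while end + 1 < num_frames and registration_error(key, end + 1) <= max_error:
            end += 1
        segments.append((key, start, end))
        start = end + 1
    return segments
```

Each resulting triple corresponds to one mesh subsequence with consistent connectivity, which is what makes per-subsequence texturing and compression straightforward.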

Hybrid Animation

The captured content contains real deformations and poses, and we want to exploit this data as much as possible when generating new performances. For this purpose, we propose a hybrid example-based animation approach that reuses the captured data wherever possible and animates it only minimally to fit the desired poses and actions. Since the captured volumetric video content consists of temporally consistent subsequences, these can be treated as basis sequences containing motion and appearance examples. Given a desired target pose sequence, the semantic enrichment of the volumetric video data allows us to retrieve the subsequence(s) or frames closest to the target sequence. These can then be concatenated and interpolated to form new animations, similar to surface motion graphs. Such generated sequences are restricted to poses and movements already present in the captured data and might not fit the desired poses perfectly. Hence, after creating a synthetic sequence from the original data that resembles the target sequence as closely as possible, we animate and kinematically adapt the recomposed frames to fit the desired poses.
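The retrieval step can be sketched as a motion-graph-style search: for each target pose, pick a captured frame so that pose distances are small and playback continues through consecutive captured frames wherever possible. This is a generic Viterbi-style dynamic program, not the authors' method; the pose vectors (e.g. joint angles from the fitted model), the Euclidean pose distance and the `jump_cost` penalty are all assumptions.

```python
import math
from typing import List, Sequence


def retrieve_frames(
    target_poses: Sequence[Sequence[float]],
    captured_poses: Sequence[Sequence[float]],
    jump_cost: float = 1.0,
) -> List[int]:
    """Pick one captured frame index per target pose.

    Minimizes the summed pose distance plus jump_cost for every transition
    that is not a step to the next captured frame.
    """
    if not target_poses:
        return []
    n = len(captured_poses)
    # cost[j]: cheapest total cost of a path ending in captured frame j.
    cost = [math.dist(target_poses[0], c) for c in captured_poses]
    back: List[List[int]] = []
    for target in target_poses[1:]:
        new_cost: List[float] = []
        ptr: List[int] = []
        for j in range(n):
            # Continuing playback (i + 1 == j) is free; any other transition
            # (cut, hold, or jump backwards) pays jump_cost.
            def via(i: int) -> float:
                return cost[i] + (0.0 if i + 1 == j else jump_cost)

            best_i = min(range(n), key=via)
            new_cost.append(via(best_i) + math.dist(target, captured_poses[j]))
            ptr.append(best_i)
        cost = new_cost
        back.append(ptr)
    # Backtrack from the cheapest final frame.
    j = min(range(n), key=lambda i: cost[i])
    path = [j]
    for pointers in reversed(back):
        j = pointers[j]
        path.append(j)
    path.reverse()
    return path
```

Raising `jump_cost` favors longer stretches of original playback; lowering it favors closer pose matches at the price of more cuts, which would then need interpolation.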

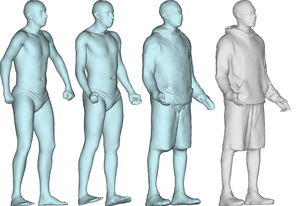

Model adaptation to volumetric video data.

The kinematic animation of the individual frames is facilitated by the body model fitted to each frame. For each mesh vertex, its location relative to the closest triangle of the template model is computed, virtually gluing the vertex to that triangle at a constant distance and orientation. With this parameterization between each mesh frame and the model, an animation of the model can be transferred directly to the volumetric video frame. The classical approach would be to synthesize new animation sequences with the body model fitted to best represent the captured data. Instead, we use the pose-optimized and shape-adapted template model to drive the kinematic animation of the volumetric video data itself. Because the original data contains all natural movements with their fine details and is exploited as much as possible, our animation approach produces highly realistic results.
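A minimal sketch of the vertex gluing, assuming each vertex is stored as in-plane coordinates `(a, b)` along two triangle edges plus a signed offset `d` along the unit normal. The parameterization, the function names and the nearest-centroid triangle lookup (a simplification of a true closest-triangle query) are illustrative assumptions, not the project's implementation.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _frame(tri):
    """Origin, two edge vectors and unit normal of triangle tri = (v0, v1, v2)."""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    n = _cross(e1, e2)
    return v0, e1, e2, _scale(n, 1.0 / _dot(n, n) ** 0.5)


def glue(p: Vec, tri) -> Tuple[float, float, float]:
    """Local coordinates (a, b, d) with p = v0 + a*e1 + b*e2 + d*n."""
    v0, e1, e2, n = _frame(tri)
    r = _sub(p, v0)
    d = _dot(r, n)  # signed distance to the triangle plane, kept in absolute units
    # In-plane part: solve the 2x2 Gram system [g11 g12; g12 g22] [a b]^T = [r.e1 r.e2]^T.
    g11, g12, g22 = _dot(e1, e1), _dot(e1, e2), _dot(e2, e2)
    r1, r2 = _dot(r, e1), _dot(r, e2)
    det = g11 * g22 - g12 * g12
    return ((r1 * g22 - r2 * g12) / det, (r2 * g11 - r1 * g12) / det, d)


def unglue(coords, tri) -> Vec:
    """Rebuild the vertex from its local coordinates on a (possibly re-posed) triangle."""
    a, b, d = coords
    v0, e1, e2, n = _frame(tri)
    return _add(_add(v0, _add(_scale(e1, a), _scale(e2, b))), _scale(n, d))


def _closest_face(p: Vec, verts: Sequence[Vec], faces) -> int:
    # Simplification: nearest face centroid instead of an exact point-to-triangle distance.
    def sq_dist(fi: int) -> float:
        f = faces[fi]
        c = _scale(_add(_add(verts[f[0]], verts[f[1]]), verts[f[2]]), 1.0 / 3.0)
        diff = _sub(p, c)
        return _dot(diff, diff)

    return min(range(len(faces)), key=sq_dist)


def bind(mesh_verts, template_verts, faces):
    """Glue every captured mesh vertex to one triangle of the fitted template."""
    out = []
    for p in mesh_verts:
        fi = _closest_face(p, template_verts, faces)
        out.append((fi, glue(p, [template_verts[k] for k in faces[fi]])))
    return out


def transfer(bindings, posed_template_verts, faces) -> List[Vec]:
    """Move the glued vertices along with the re-posed template."""
    return [unglue(c, [posed_template_verts[k] for k in faces[fi]]) for fi, c in bindings]
```

Because `d` is multiplied by the unit normal of the posed triangle, each vertex keeps its distance to the triangle plane, while its in-plane position follows the triangle's deformation.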

Publications

W. Morgenstern, A. Hilsmann, P. Eisert

Progressive Non-rigid Registration of Temporal Mesh Sequences, Proc. 16th European Conference on Visual Media Production (CVMP), London, United Kingdom, December 2019.

A. Hilsmann, P. Fechteler, W. Morgenstern, W. Paier, I. Feldmann, O. Schreer, P. Eisert

Going beyond Free Viewpoint: Creating Animatable Volumetric Video of Human Performances, IET Computer Vision, Special Issue on Computer Vision for the Creative Industries, May 2020.

W. Paier, A. Hilsmann, P. Eisert

Interactive Facial Animation with Deep Neural Networks, IET Computer Vision, Special Issue on Computer Vision for the Creative Industries, May 2020.

P. Eisert, A. Hilsmann

Hybrid Human Modeling: Making Volumetric Video Animatable, In: Real VR - Digital Immersive Reality, Springer, pp. 158-177, February 2020.

S. Gül, D. Podborski, A. Hilsmann, W. Morgenstern, P. Eisert, O. Schreer, T. Buchholz, T. Schierl, C. Hellge

Interactive Volumetric Video from the Cloud, International Broadcasting Convention (IBC), Amsterdam, Netherlands, September 2020.